Hello! This is Jooyoung Kim, a mixing engineer and music producer. Today, I’ll talk about music file codecs in the final article of the Basics of Mixing series. These posts are based on my book, Basics of Mixing, published in South Korea.

Let’s dive in!

Codec

The term codec stands for coder-decoder: hardware or software that encodes and decodes digital signals. There are three main types of codecs:

- Non-compression: WAV, AIFF, PDM (DSD), PAM

- Lossless Compression: FLAC, ALAC, WMAL

- Lossy Compression: WMA, MP3, AAC

Non-compression codecs retain 100% of the original audio data with no compression applied.

Lossless compression codecs reduce file size while preserving all original data. Once decoded, the audio is bit-for-bit identical to the source, so they sound the same as uncompressed formats like WAV.

Lossy compression codecs remove some audio data to achieve a much smaller file size, which can affect sound quality depending on the compression level.

In the music industry, WAV, MP3, and FLAC are the most commonly used formats for mastering and distribution.

How is file size determined?

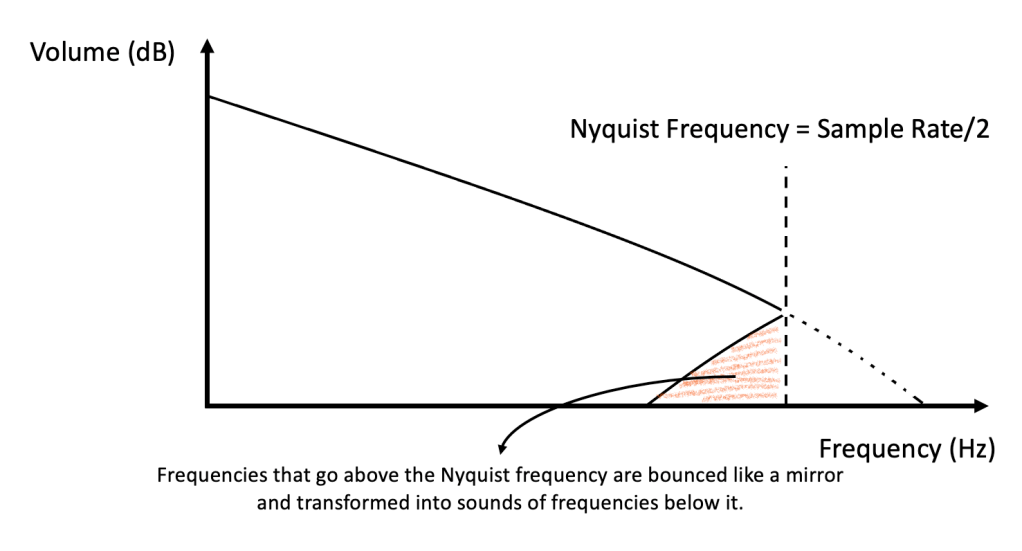

For WAV files, size is determined by sample rate and bit depth, together with the number of channels and the length of the audio. How about MP3 and FLAC?
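To make the WAV case concrete before turning to the other formats, here is a minimal sketch of the arithmetic. The function name `wav_data_bytes` and the 3-minute stereo example are my own assumptions for illustration, and the result covers only the raw audio data, not the small file header.

```python
def wav_data_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Approximate size of uncompressed PCM audio data in bytes (header excluded)."""
    bits = sample_rate_hz * bit_depth * channels * seconds
    return bits // 8  # 8 bits per byte


# Hypothetical example: a 3-minute stereo track at CD quality (44.1 kHz, 16-bit)
size = wav_data_bytes(44_100, 16, 2, 180)
print(size)              # 31752000 bytes
print(size / 1_000_000)  # 31.752 (decimal megabytes)
```

Doubling the sample rate or the channel count doubles the size, which is why high-resolution WAV sessions grow so quickly.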

MP3 file size is set by bitrate rather than by sample rate and bit depth. You’ve probably seen MP3 files labeled 256 kbps or 320 kbps. This means 256,000 or 320,000 bits of audio data are used for each second of sound. Higher bitrates generally result in better sound quality but larger file sizes.
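The bitrate arithmetic can be sketched the same way. This is a rough estimate that assumes a constant bitrate and ignores tags and headers; the function name `cbr_bytes` and the 3-minute track length are hypothetical choices for illustration.

```python
def cbr_bytes(bitrate_kbps, seconds):
    """Approximate audio payload of a constant-bitrate file in bytes."""
    # kbps here means 1,000 bits per second, as in "320 kbps = 320,000 bits"
    return bitrate_kbps * 1000 * seconds // 8


# Hypothetical example: the same 3-minute track at common MP3 bitrates
for kbps in (128, 256, 320):
    print(kbps, cbr_bytes(kbps, 180))
# 128 2880000
# 256 5760000
# 320 7200000
```

Note that sample rate and bit depth do not appear in the calculation at all: at a fixed bitrate, the file size depends only on the length of the audio.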

FLAC files use compression level to control file size. A higher compression level takes longer to encode but results in a smaller file. However, since FLAC is lossless, the sound quality remains unchanged regardless of the compression level.
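The idea that a compression level changes file size but not the data can be illustrated with a small experiment. To be clear, this sketch does not use FLAC: it uses Python's built-in `zlib` (a general-purpose lossless compressor) as a stand-in, and the test signal is a made-up 441 Hz sine wave, so the actual sizes say nothing about what FLAC would produce.

```python
import math
import struct
import zlib

# One cycle of a 441 Hz sine at 44.1 kHz is exactly 100 samples; repeat it for 1 second.
cycle = [round(32767 * math.sin(2 * math.pi * n / 100)) for n in range(100)]
pcm = struct.pack("<100h", *cycle) * 441  # 16-bit mono PCM bytes

results = {}
for level in (1, 9):  # zlib levels (stand-in for FLAC levels): 1 = fastest, 9 = smallest
    packed = zlib.compress(pcm, level)
    restored = zlib.decompress(packed)
    # Store the compressed size and whether decoding gives back the exact bytes
    results[level] = (len(packed), restored == pcm)
    print(level, len(packed), restored == pcm)
```

Whatever sizes are printed, the last column should be `True` at every level: the decoded bytes match the original exactly, which is what lossless means.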

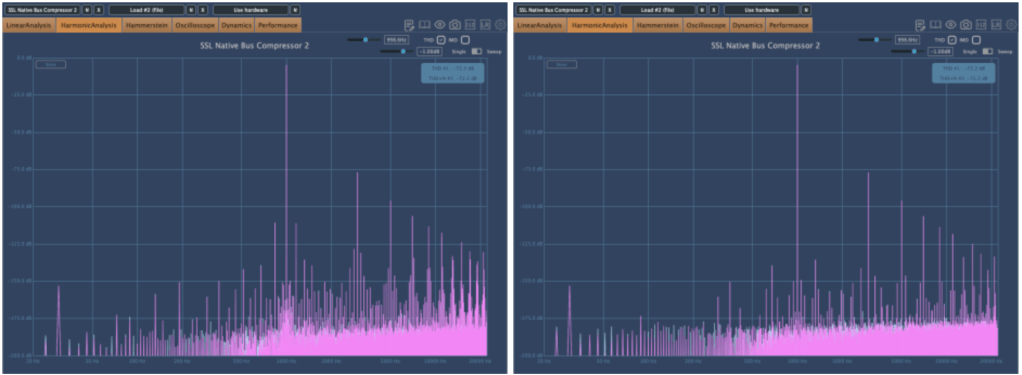

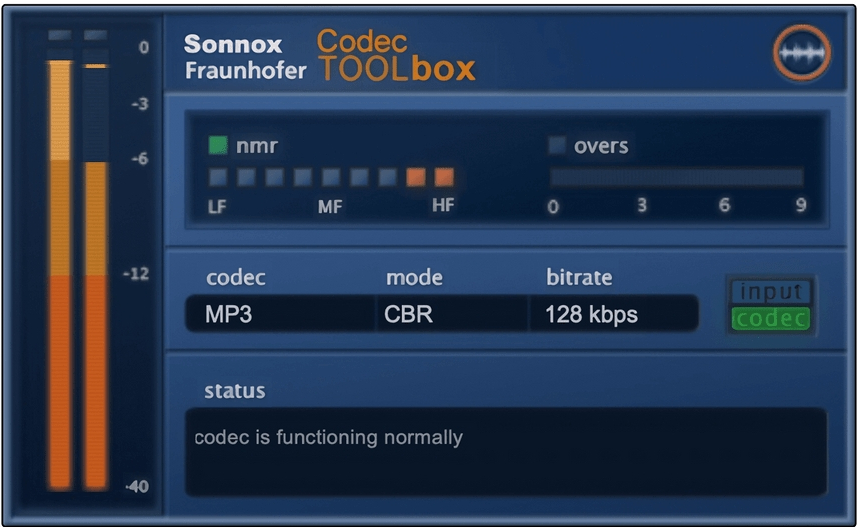

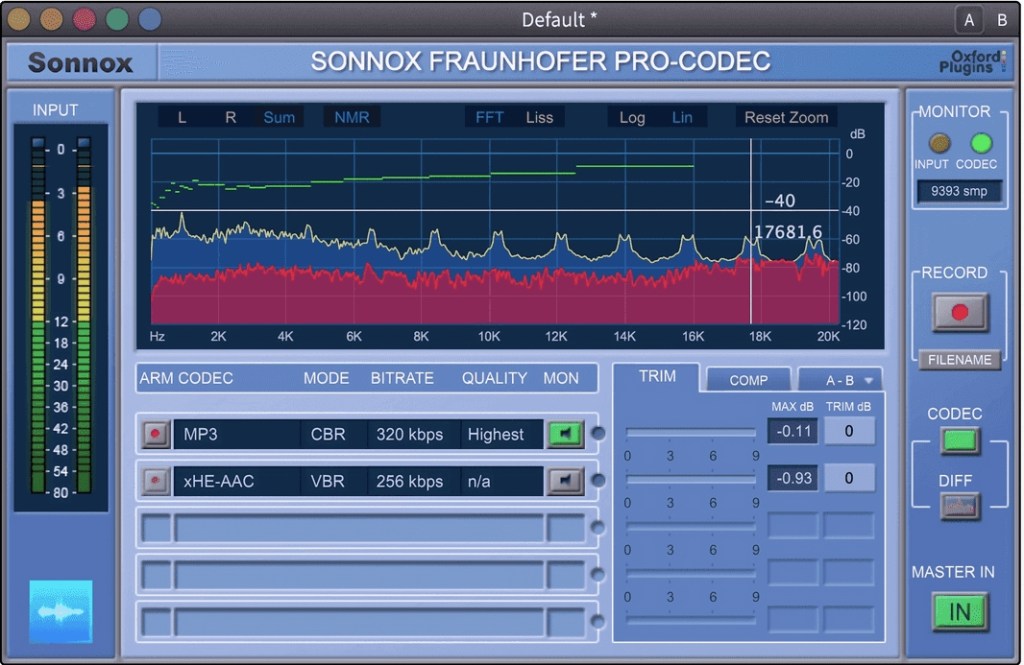

If you want to compare how different codecs affect sound quality, you can use tools like Sonnox Codec Toolbox or Fraunhofer Pro-Codec.

This is the last article in the ‘Basics of Mixing’ series. Time really flies, haha.

I hope these posts have helped expand your knowledge and improve your mixing skills.

Thanks for reading, and I’ll see you in the next post!