Hello, this is Jooyoung Kim, music producer and audio engineer. Today, I’ll be discussing the history and various types of reverb.

Shall we dive in?

The image above is from a paper on “Acoustic Pots” found in ancient and medieval architecture. These pots were embedded in walls to function as a type of Helmholtz Resonator.

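That might sound too technical, but a Helmholtz resonator is essentially a cavity with a narrow opening that resonates at one specific frequency, like the tone you get when you blow across the top of a bottle. Depending on how it is built, it can absorb or reinforce sound around that frequency. The same principle shows up in modern car design, for example to tame intake and exhaust noise.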
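If you're curious how the resonant frequency depends on the shape, here is a rough sketch using the textbook formula for an idealized Helmholtz resonator, f = (c / 2π) · √(A / (V · L)). The pot dimensions are made up for illustration (they are not taken from the paper), and `helmholtz_frequency` is just a name I chose.

```python
import math

def helmholtz_frequency(neck_area_m2, neck_length_m, cavity_volume_m3,
                        speed_of_sound=343.0):
    """Resonant frequency (Hz) of an idealized Helmholtz resonator.

    neck_length_m is the *effective* neck length; real necks need an
    end correction, which this sketch leaves to the caller.
    """
    return (speed_of_sound / (2 * math.pi)) * math.sqrt(
        neck_area_m2 / (cavity_volume_m3 * neck_length_m)
    )

# Hypothetical pot: 4 cm wide opening, 5 cm effective neck, 5 litre cavity.
neck_area = math.pi * 0.02 ** 2
pot_frequency = helmholtz_frequency(neck_area, 0.05, 0.005)
print(f"{pot_frequency:.1f} Hz")
```

With these made-up numbers the resonance lands in the low hundreds of hertz. A bigger cavity lowers the frequency and a wider opening raises it.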

In ancient times, the Maya built temples like the Temple of Kukulkan at Chichen Itza, which used echo to create fascinating sounds.

The Greeks also designed spaces with excellent acoustic properties, enabling sound to be heard clearly from specific spots.

Back then, without microphones, these architectural advances allowed sound to be projected effectively, and this often included reverb.

Moving to the Modern Era

In the modern era, Bill Putnam, the founder of Universal Audio and later UREI, is widely credited as the first to use artificial reverb creatively on a commercial recording. He used it on the song “Peg o’ My Heart” by the Harmonicats (1947).

For this track, they recorded instruments, played the sound in a studio bathroom, and re-recorded it to capture the reverb. If you’ve ever sung in the shower, you’ll know exactly the kind of reverb I’m talking about! This was the earliest form of what we now call an echo chamber.

Echo Chamber (Chamber Reverb)

Inspired by this, studios started building dedicated rooms for reverb, known as echo chambers.

The image above shows one of the echo chambers at the famous Abbey Road Studios. For those familiar with plugins, this might ring a bell.

Waves created a plugin called Abbey Road Chambers, which is based on impulse responses (IR) from these very rooms. Notice the tile walls—similar to bathroom tiles—used to reflect sound. The process involves playing sound through speakers and capturing it with microphones.

By the way, the classic speakers you see in that plugin are B&W 800D speakers. The 800 series is a dream for many, though the price is quite steep, even second-hand. Hopefully, I’ll own a pair of 801D4s someday…

Anyway, there are plenty of plugins that emulate these echo chambers. A real chamber sounds great, but the cost of building one is astronomical.

Imagine dedicating an entire room just for reverb—it’s quite an investment! Unless, of course, money is no object…

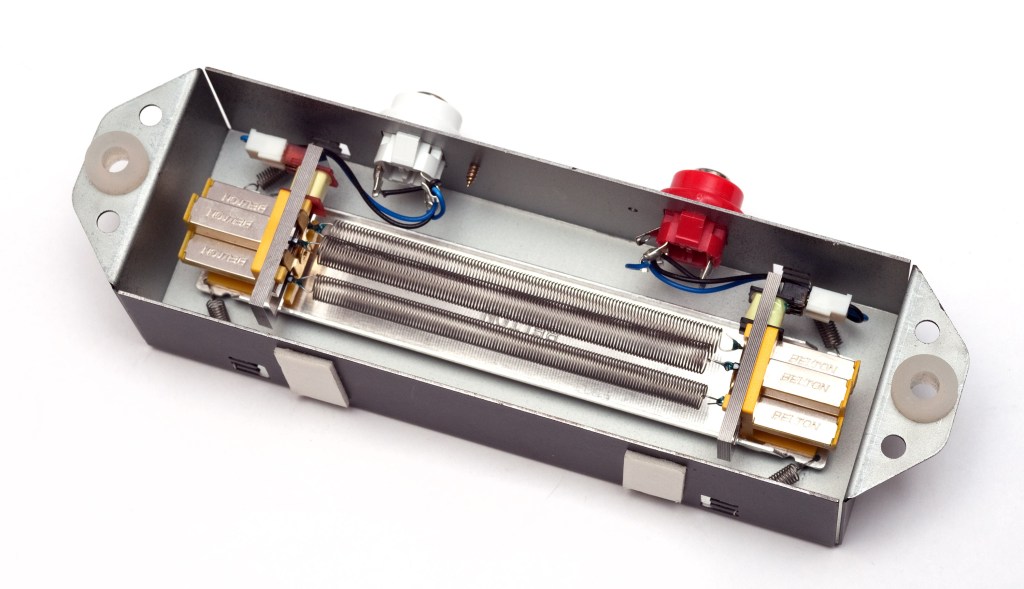

Plate Reverb

Plate reverb was developed as a more cost-effective alternative to the echo chamber.

This type of reverb works by driving a large suspended metal plate with a transducer, with contact pickups attached to the plate to capture the vibrations. The tone varies depending on the type of metal used, giving plate reverb its distinctive sound.

While these units could weigh up to 250 kg and were still quite expensive, they were far more affordable than building a dedicated reverb room.

Digital Reverb

To reduce the size and cost further, digital reverb was invented. The image above shows the first commercial digital reverb, the EMT 250.

Spring Reverb

Spring reverb was originally developed for use in Hammond organs to create reverb effects.

The technology was later licensed to Fender, leading to the inclusion of spring reverb in Fender guitar amps. It works on a similar principle to plate reverb, except that the signal vibrates springs instead of a plate. Because it has been built into guitar amps for so long, it has a familiar and pleasant sound when used with guitars.

Shimmer Reverb

Shimmer reverb adds pitch shifting, typically an octave up, to the reverb tail, producing a characteristic shimmering effect. It’s perfect for when you want that lush, expansive sound.

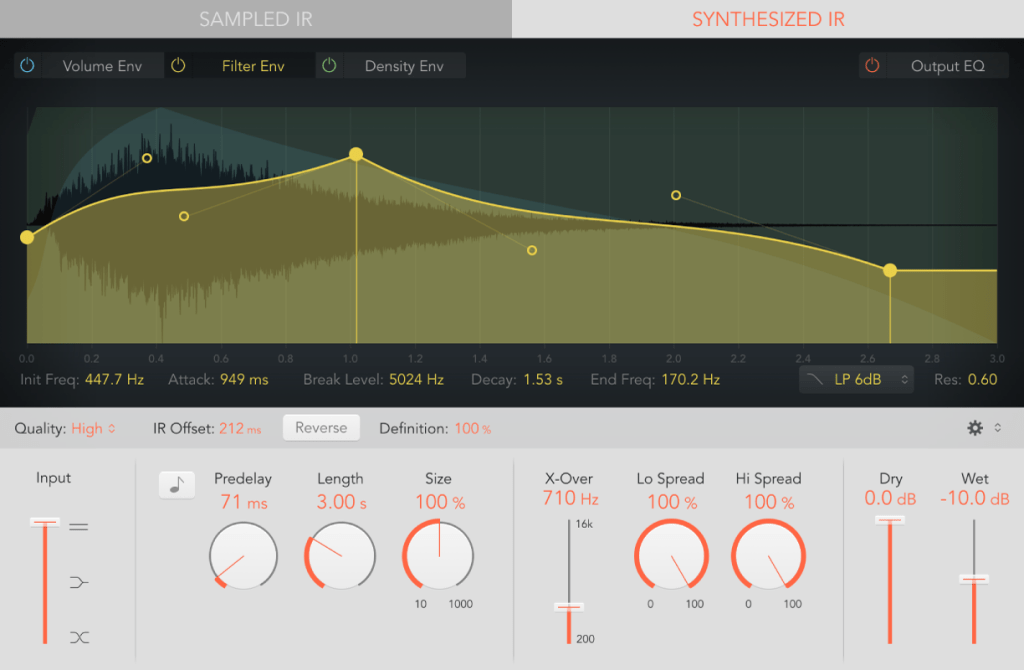

Hall, Studio, and Other Reverbs (Convolution Reverb)

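Reverbs like hall or room reverb are actually quite tricky to classify, because those names describe the space being imitated rather than the technology behind it. When they are based on real spaces, they are generated by applying an impulse response (IR) of that space to the signal through a process called convolution.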

Let me briefly explain what an impulse is: it’s a very short, high-amplitude signal. Mathematically, it’s known as a Dirac delta function, where:

- if x = 0, y = ∞

- if x ≠ 0, y = 0

- and its integral from -∞ to ∞ equals 1.
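To make this a bit more concrete, here is a small sketch of why the impulse matters. On a computer, the impulse becomes a single sample with value 1. The `toy_room` function is a hypothetical stand-in for a real space (a direct sound plus two echoes), not a model of any actual room.

```python
import numpy as np

def toy_room(signal):
    """Hypothetical 'room': the direct sound plus two quieter echoes."""
    out = np.zeros(len(signal) + 6)
    out[:len(signal)] += signal               # direct sound
    out[3:3 + len(signal)] += 0.5 * signal    # echo 3 samples later
    out[6:6 + len(signal)] += 0.25 * signal   # echo 6 samples later
    return out

# Discrete stand-in for the impulse: a single sample with value 1.
impulse = np.array([1.0])
impulse_response = toy_room(impulse)

# Any other signal sent through the room...
dry = np.array([1.0, -0.5, 0.25])
via_room = toy_room(dry)

# ...matches that signal convolved with the room's impulse response.
via_convolution = np.convolve(dry, impulse_response)
print(np.allclose(via_room, via_convolution))
```

In other words, once you know how a (linear) space responds to an impulse, you can calculate how it would respond to any sound.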

This impulse can be used to measure the frequency response of speakers. A perfect impulse is hard to produce in a real room, so when measuring a space we use a signal known as a sine sweep instead. The sweep is played in the space, recorded, and mathematically transformed through deconvolution to generate the impulse response.

While this might sound complicated, you can think of it as recording a sine sweep in WAV format and using it to create a reverb through calculation.
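Here is a minimal sketch of that calculation, assuming a simulated room instead of a real recording. The `true_ir` array is a made-up impulse response, and dividing the spectra (with a small safety term `eps`) is just one common way to deconvolve, not necessarily what any particular plugin or measurement tool does.

```python
import numpy as np

sample_rate = 8000   # Hz, kept low so the example runs quickly
duration = 1.0       # seconds
t = np.arange(int(sample_rate * duration)) / sample_rate

# Exponential sine sweep from f_start to f_end.
f_start, f_end = 50.0, 3500.0
log_ratio = np.log(f_end / f_start)
sweep = np.sin(2 * np.pi * f_start * duration / log_ratio
               * (np.exp(t * log_ratio / duration) - 1))

# Made-up room: direct sound plus two reflections (an assumption for the demo).
true_ir = np.zeros(400)
true_ir[0], true_ir[120], true_ir[300] = 1.0, 0.6, 0.3

# What the microphone would capture when the sweep plays in that room.
recorded = np.convolve(sweep, true_ir)

# Deconvolution: divide the recording's spectrum by the sweep's spectrum.
# eps keeps the division stable where the sweep has almost no energy.
n = len(recorded)
sweep_spectrum = np.fft.rfft(sweep, n)
recorded_spectrum = np.fft.rfft(recorded, n)
eps = 1e-6 * np.max(np.abs(sweep_spectrum)) ** 2
ir_spectrum = (recorded_spectrum * np.conj(sweep_spectrum)
               / (np.abs(sweep_spectrum) ** 2 + eps))
estimated_ir = np.fft.irfft(ir_spectrum, n)[:len(true_ir)]

# The strongest peak should sit where the direct sound is.
print(int(np.argmax(np.abs(estimated_ir))))
```

The recovered impulse response only covers the frequencies the sweep actually played, so the peaks come out slightly smeared compared with the made-up original. That is one reason real measurements sweep across the whole audible range.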

With IR reverbs, you can also use other sounds like snare hits or kick drum samples as IR files to create unique effects.
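As a rough sketch of what an IR reverb does under the hood, the snippet below convolves a dry signal with an IR and blends the result back in. The `convolution_reverb` function and its `wet_mix` parameter are names I made up, and the decaying noise burst stands in for a real IR file such as a room recording or a snare sample.

```python
import numpy as np

def convolution_reverb(dry, impulse_response, wet_mix=0.3):
    """Blend a dry signal with its convolution against an impulse response."""
    wet = np.convolve(dry, impulse_response)
    # Pad the dry signal with silence so the reverb tail is not cut off.
    padded_dry = np.pad(dry, (0, len(wet) - len(dry)))
    return (1.0 - wet_mix) * padded_dry + wet_mix * wet

sample_rate = 8000
rng = np.random.default_rng(0)

# Stand-in dry signal: a single click followed by silence.
dry = np.zeros(sample_rate // 10)
dry[0] = 1.0

# Stand-in IR: half a second of noise with an exponential decay.
ir_length = sample_rate // 2
decay = np.exp(-np.arange(ir_length) / (0.1 * sample_rate))
impulse_response = rng.standard_normal(ir_length) * decay

output = convolution_reverb(dry, impulse_response)
print(len(dry), len(output))
```

The output is longer than the input because the tail keeps ringing after the dry sound ends. Swapping in a different array for `impulse_response` is all it takes to change the character of the effect.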

Logic has its Space Designer plugin for this,

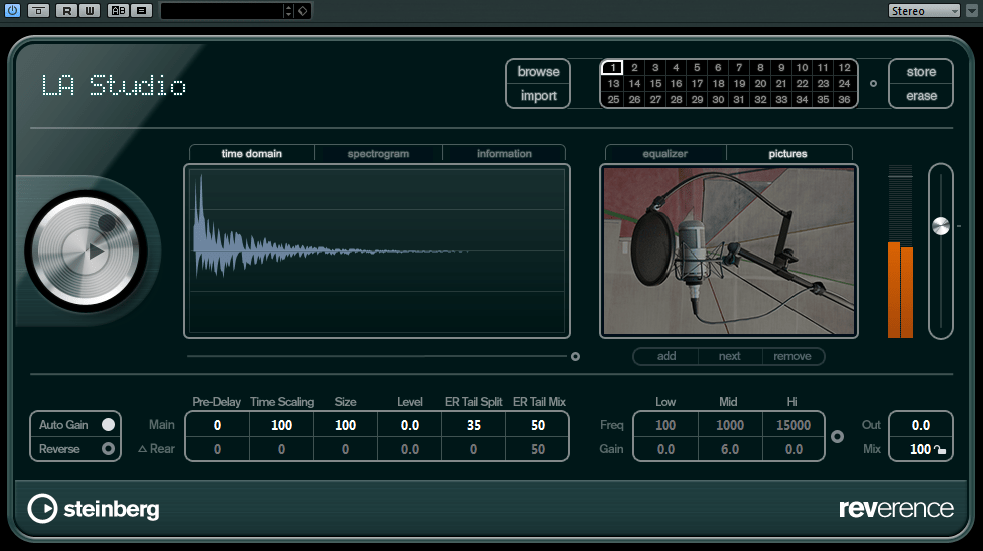

Cubase uses REVerence,

and Pro Tools has Space.

In the end, the reverbs we use on our computers can be divided into two types: algorithmic reverb and convolution reverb.

Conclusion

That covers the history and types of reverb. I may have gone off on a tangent at times, and if some of it was too complex, don’t worry! You don’t need to fully understand every detail. Music is all about what sounds good, after all.

On a different note, I finally received permission from Universal Audio to use some photos for my book. I’ll post an update when the book is ready!

See you in the next post!