Hi everyone! This is Jooyoung Kim, a mixing engineer and music producer.

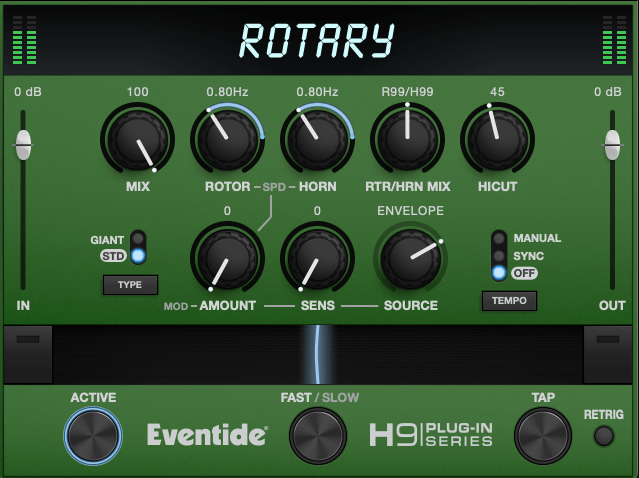

Today, I want to talk about modulation effects, which are often overlooked during mixing.

(These concepts are based on my book Basics of Mixing, published in Korea.)

What are Modulation Effects?

In simple terms, a modulation effect changes one or more parameters of a sound, such as its level, pitch, or delay time, over time.

Do you frequently use modulation effects when composing or mixing?

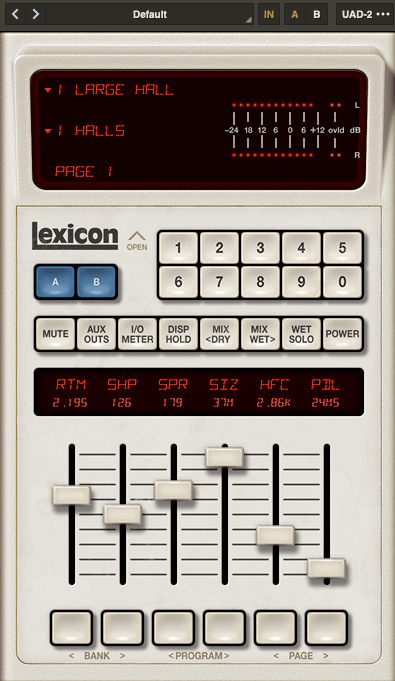

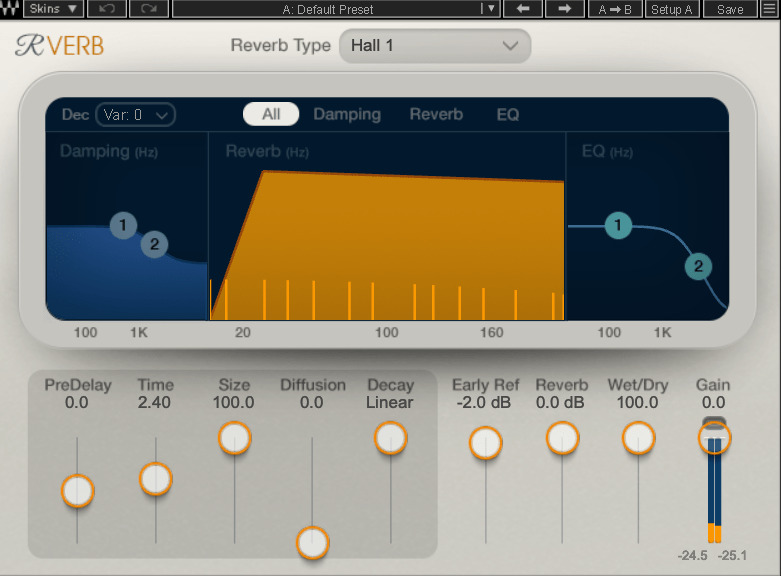

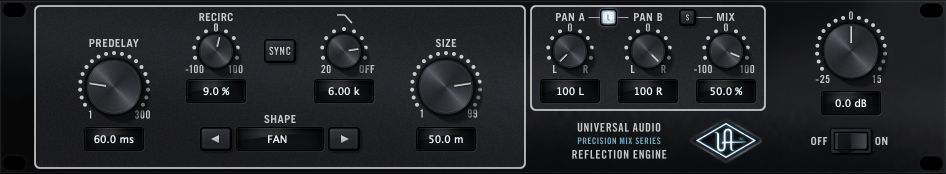

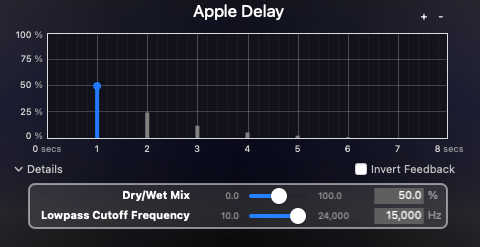

I believe modulation effects, along with reverb and delay, are crucial in determining the quality of a track. However, even if you understand the theory behind these effects, you might hesitate to use them if you’re unfamiliar with how they sound in practice.

That’s why I encourage you to experiment with modulation effects regularly, even if it feels forced at first.

Types of Modulation Effects

Let’s break down some common modulation effects:

- Tremolo

- Vibrato

- Flanger

- Phaser

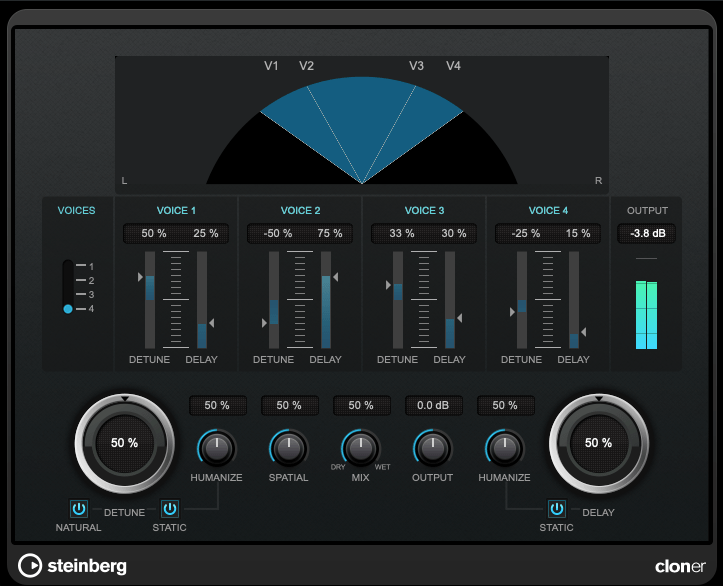

- Chorus

Before diving into these, we need to discuss two foundational concepts: the All-Pass Filter and the Comb Filtering Effect.

All-Pass Filter

An all-pass filter passes every frequency at the same level, so the frequency balance of the signal stays unchanged. But why would we use such a filter?

The answer lies in phase.

When a signal passes through an all-pass filter, the phase shifts depending on the frequency. Combining this filtered signal with the original creates unique sounds due to constructive and destructive interference at different frequencies.
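To make this concrete, here is a minimal Python sketch of a first-order digital all-pass filter, H(z) = (a + z^-1) / (1 + a z^-1). The function names, the coefficient a = 0.5, and the 48 kHz sample rate are my own choices for illustration, not taken from any particular plugin. It prints the gain and phase shift at a few frequencies, plus the level you would get when mixing the filtered signal equally with the original.

```python
import cmath
import math

def allpass_response(a, freq_hz, sample_rate=48000.0):
    """Complex response of H(z) = (a + z^-1) / (1 + a z^-1) at freq_hz."""
    w = 2.0 * math.pi * freq_hz / sample_rate  # normalized angular frequency
    z_inv = cmath.exp(-1j * w)                 # z^-1 evaluated on the unit circle
    return (a + z_inv) / (1.0 + a * z_inv)

def mix_level_db(a, freq_hz, sample_rate=48000.0):
    """Level in dB of an equal mix (dry + all-passed) / 2 at freq_hz."""
    h = allpass_response(a, freq_hz, sample_rate)
    magnitude = abs(0.5 * (1.0 + h))
    return 20.0 * math.log10(max(magnitude, 1e-12))  # clamp to avoid log(0)

a = 0.5  # illustrative coefficient; any real value with |a| < 1 is stable
for f in (100, 1000, 5000, 20000):
    h = allpass_response(a, f)
    print(f"{f:>6} Hz  gain {abs(h):.3f}  "
          f"phase {math.degrees(cmath.phase(h)):8.1f} deg  "
          f"mixed with dry {mix_level_db(a, f):7.2f} dB")
```

The gain column stays at 1 for every frequency while the phase column changes, and the mixed level drops more as the phase shift approaches 180 degrees. A single stage like this is only a sketch of the principle, not a finished effect.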

For more details on phase and interference, check out “Basics of Mixing – 2.2 Phase and Interference.”

Comb Filtering Effect

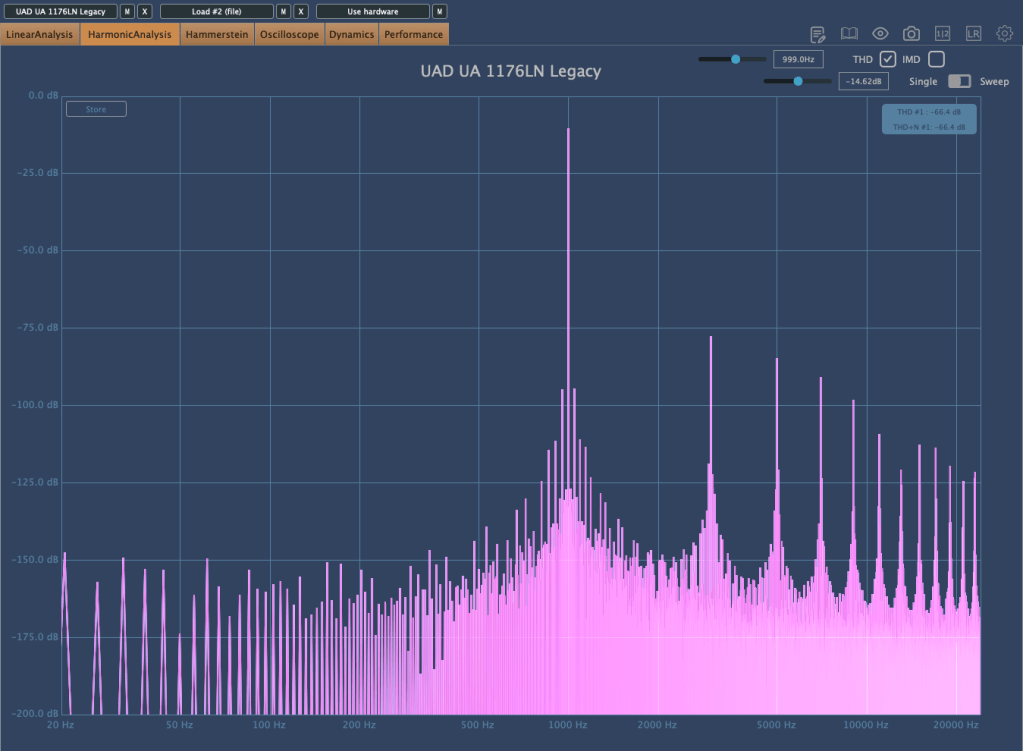

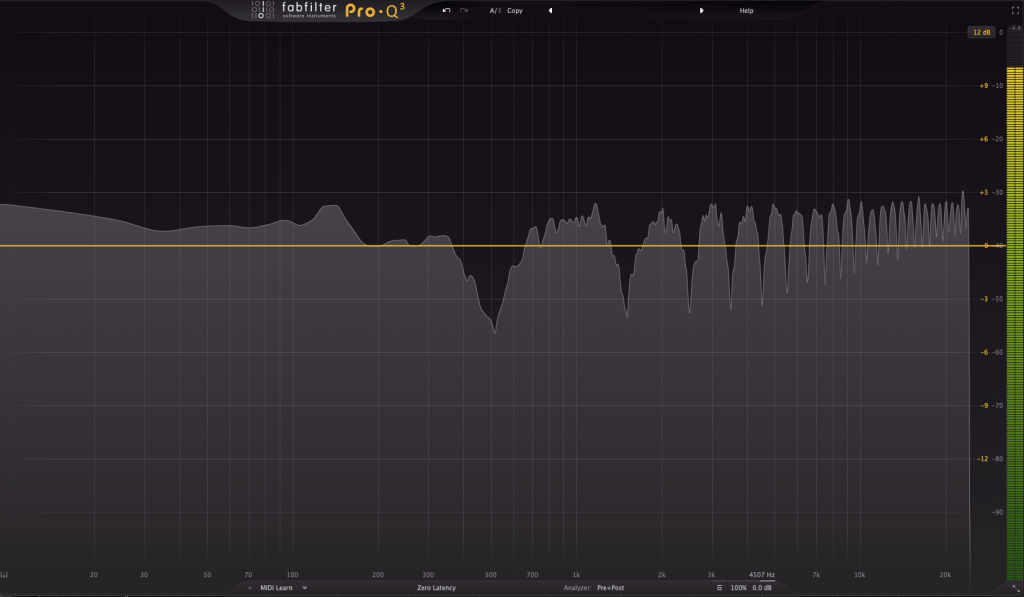

The comb filtering effect occurs when an original signal is combined with a delayed version of itself. This results in a frequency response that looks like the teeth of a comb.

It’s easy to understand this concept through simple experiments.

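When every frequency in a signal is delayed by the same amount of time, that delay corresponds to a different phase shift at each frequency. Frequencies where the delayed copy arrives half a cycle late cancel out (destructive interference), while frequencies where it arrives a whole number of cycles late are reinforced (constructive interference). This creates the characteristic comb-like frequency response.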
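If you want to try the experiment with numbers, here is a small Python sketch. It assumes the original and the delayed copy are mixed at equal level, and the 1 ms delay is just an example value I picked. For a delay of τ seconds, a frequency f is shifted by 2πfτ radians, so the notches fall at odd multiples of 1/(2τ) and the peaks at multiples of 1/τ. The function names are illustrative.

```python
import cmath
import math

def comb_gain(freq_hz, delay_s):
    """Linear gain of (signal + copy delayed by delay_s) at freq_hz."""
    phase_shift = 2.0 * math.pi * freq_hz * delay_s  # radians of lag at this frequency
    return abs(1.0 + cmath.exp(-1j * phase_shift))

def notch_frequencies(delay_s, max_hz):
    """Frequencies up to max_hz where the copy is half a cycle late."""
    notches = []
    k = 0
    while (2 * k + 1) / (2.0 * delay_s) <= max_hz:
        notches.append((2 * k + 1) / (2.0 * delay_s))
        k += 1
    return notches

delay = 0.001  # 1 ms, an example value
print("notches (Hz):", notch_frequencies(delay, 5000.0))
for f in (250, 500, 750, 1000, 1500, 2000):
    print(f"{f:>5} Hz  gain {comb_gain(f, delay):.3f}")
```

Changing `delay` moves every notch at once: a shorter delay spreads the teeth of the comb further apart, and a longer delay packs them closer together.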

Effects like flanger, phaser, and chorus are built on these principles of phase manipulation.

That’s all for now! In the next post, I’ll delve deeper into each modulation effect.

See you next time! 😊